Benchmark Results

Prompt Refiner's effectiveness has been validated through 3 comprehensive benchmark suites covering function calling optimization, RAG applications, and performance.

Available Benchmarks

⭐ Function Calling Benchmark

SchemaCompressor tested on 20 real-world API schemas achieving 56.9% average token reduction with 100% lossless compression.

Jump to Function Calling Benchmark →

📚 RAG & Text Optimization

Comprehensive A/B testing on 30 real-world test cases measuring 5-15% token reduction and response quality preservation.

⚡ Latency & Performance

Performance testing measuring processing overhead - < 0.5ms per 1k tokens.

Function Calling Benchmark

SchemaCompressor was rigorously tested on 20 production API schemas from industry-leading platforms including Stripe, Salesforce, HubSpot, Slack, OpenAI, Anthropic, Google Calendar, Notion, and more.

Results Summary

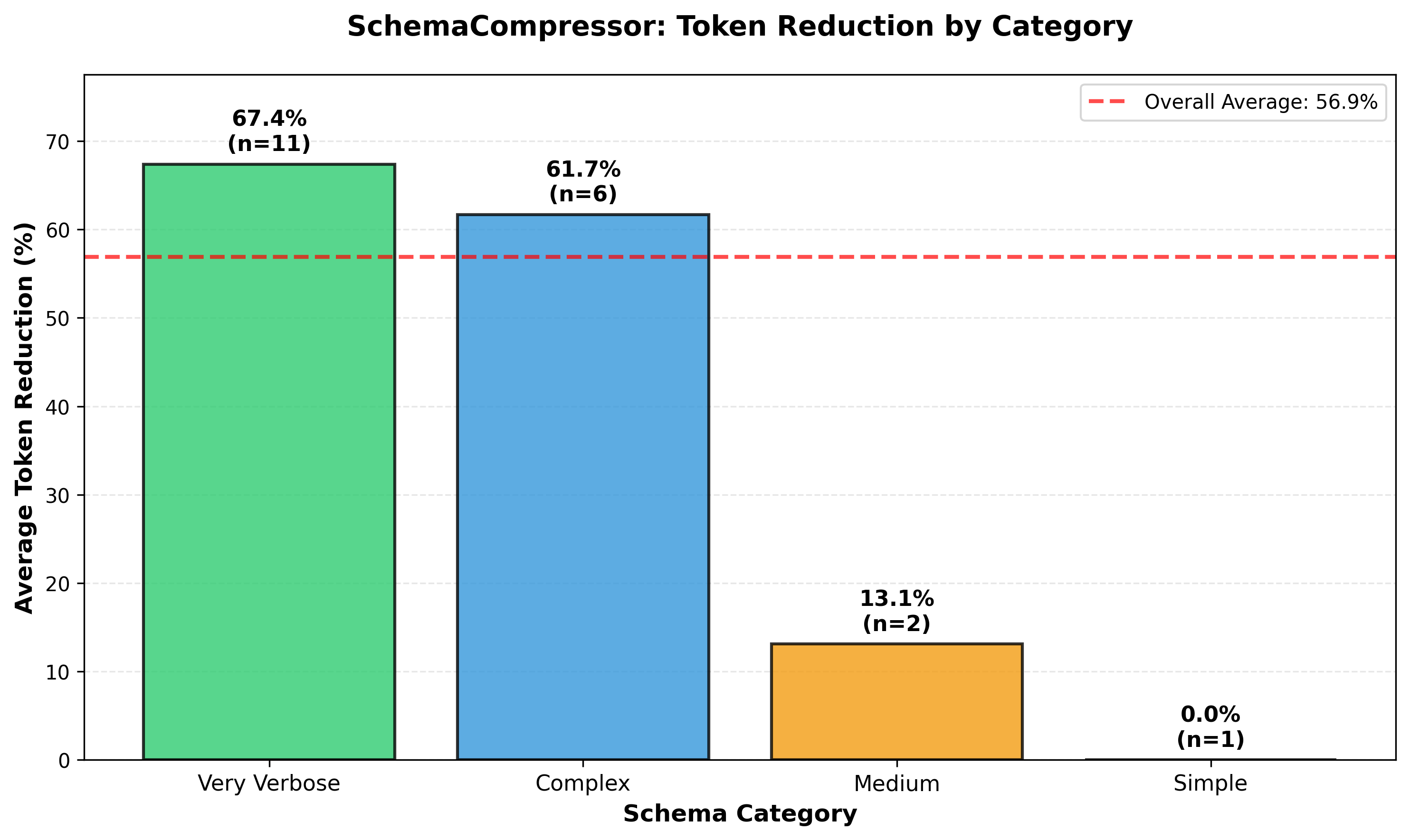

| Category | Schemas | Avg Reduction | Top Performer |

|---|---|---|---|

| Very Verbose (Enterprise APIs) | 11 | 67.4% | HubSpot Contact: 73.2% |

| Complex (Rich APIs) | 6 | 61.7% | Slack Messaging: 70.8% |

| Medium (Standard APIs) | 2 | 13.1% | Weather API: 20.1% |

| Simple (Minimal APIs) | 1 | 0.0% | Calculator (already minimal) |

| Overall Average | 20 | 56.9% | — |

Key Highlights

- ✨ 56.9% average reduction - 15,342 tokens saved across all schemas

- 🔒 100% lossless compression - All protocol fields preserved (name, type, required, enum)

- ✅ 100% callable (20/20 validated) - All compressed schemas work correctly with OpenAI function calling

- 🏢 Enterprise APIs see 70%+ reduction - HubSpot (73.2%), OpenAI File Search (72.9%), Salesforce (72.1%)

- 📊 Real-world schemas - Production APIs from Stripe, Slack, Twilio, SendGrid, etc.

- ⚡ Zero API cost - Local processing with tiktoken

Functional Validation

We tested all 20 compressed schemas with real OpenAI function calling to prove they work correctly:

| Category | Schemas | Identical Calls | Different Args (Valid) | Callable Rate |

|---|---|---|---|---|

| Simple | 1 | 1 (100%) | 0 | 100% |

| Medium | 4 | 4 (100%) | 0 | 100% |

| Complex | 6 | 4 (67%) | 2 (33%) | 100% |

| Very Verbose | 9 | 3 (33%) | 6 (67%) | 100% |

| Overall | 20 | 12 (60%) | 8 (40%) | 100% |

Key Findings:

- ✅ 100% callable (20/20): Every compressed schema successfully triggers function calls

- ✅ 100% structurally valid: All function names, types, required fields preserved

- ✅ 60% identical (12/20): Majority produce exactly the same function call

- ⚠️ 40% different but valid (8/20): Compressed descriptions influence LLM choices

- Different default values chosen (num_results: 10 → 5, time_range: past_month → any)

- Different placeholder values (database: 'production' → 'your_database_name')

- Different optional fields populated (location: 'Zoom' → 'Conference Room A')

- All differences use valid enum/type values - schemas remain functionally correct

Bottom Line: Compression doesn't break schemas - it's 100% safe for production use.

Top Performing Schemas

- HubSpot Contact Creation: 2,157 → 578 tokens (73.2% reduction)

- OpenAI File Search: 2,019 → 548 tokens (72.9% reduction)

- Salesforce Account Creation: 2,157 → 602 tokens (72.1% reduction)

- Slack Send Message: 979 → 286 tokens (70.8% reduction)

- Anthropic Computer Use: 1,598 → 471 tokens (70.5% reduction)

Visualizations

Token Reduction by Category

Complex and enterprise APIs achieve 60-70%+ token reduction

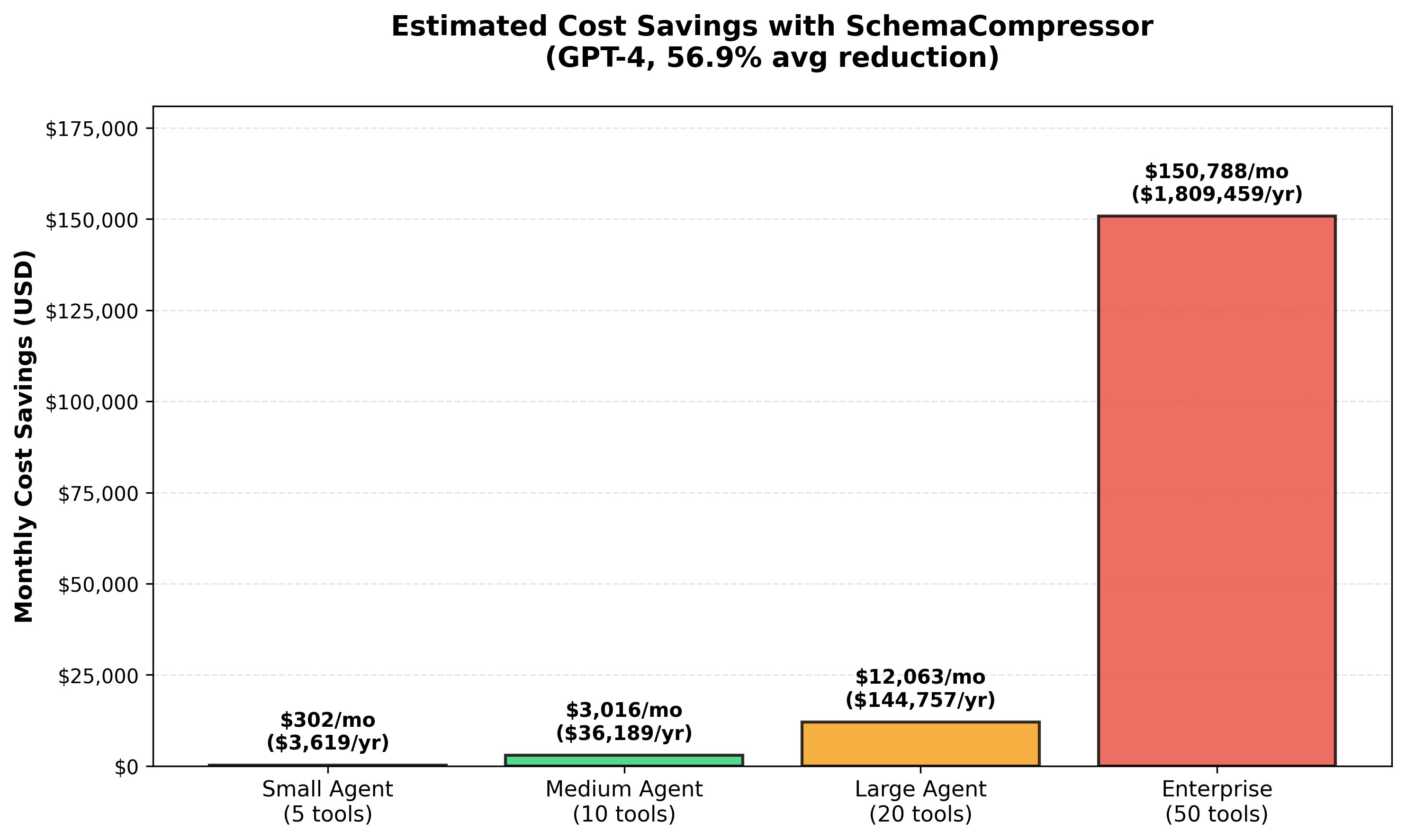

Cost Savings Projection

Estimated monthly savings for different agent sizes (GPT-4 pricing)

Cost Savings Examples

Real-world cost savings for AI agents with function calling:

| Agent Size | Tools | Calls/Day | Monthly Savings | Annual Savings |

|---|---|---|---|---|

| Small | 5 | 100 | $44 | $528 |

| Medium | 10 | 500 | $541 | $6,492 |

| Large | 20 | 1,000 | $3,249 | $38,988 |

| Enterprise | 50 | 5,000 | $40,664 | $487,968 |

Based on GPT-4 pricing ($0.03/1k input tokens) and 56.9% average reduction

Why This Matters

Function calling is one of the biggest sources of token consumption in AI agent systems. Verbose tool descriptions can consume thousands of tokens per request. SchemaCompressor optimizes documentation while preserving 100% of the protocol specification, making it completely safe to use in production.

What Gets Compressed

SchemaCompressor optimizes:

- ✅ Description fields (main source of verbosity)

- ✅ Redundant explanations and examples

- ✅ Marketing language and filler words

- ✅ Overly detailed parameter descriptions

SchemaCompressor NEVER modifies:

- ❌ Function name

- ❌ Parameter names

- ❌ Parameter types (string, number, boolean, etc.)

- ❌ Required fields

- ❌ Enum values

- ❌ Default values

- ❌ JSON structure

Running the Benchmark

Want to validate these results yourself?

# Install dependencies

uv sync --group dev

# Run benchmark (no API key needed!)

cd benchmark/function_calling

python benchmark_schemas.py

# Generate visualizations

python visualize_results.py

Cost: $0 (local token counting with tiktoken) Duration: ~1 minute

Results are saved to benchmark/function_calling/results/:

- schema_compression_results.csv - Full results table

- before_after_examples.md - Top 3 examples with comparisons

- plots/ - Visualization charts

View Full Function Calling Benchmark Documentation →

RAG & Text Optimization

This benchmark validates token reduction and quality preservation for RAG applications and text optimization use cases.

Overview

The benchmark measures two critical factors:

- Token Reduction - How much we can reduce prompt size (cost savings)

- Response Quality - Whether responses remain semantically equivalent

Quality is evaluated using two methods: 1. Cosine Similarity - Semantic similarity of response embeddings (0-1 scale) 2. LLM Judge - GPT-4 evaluation of response equivalence

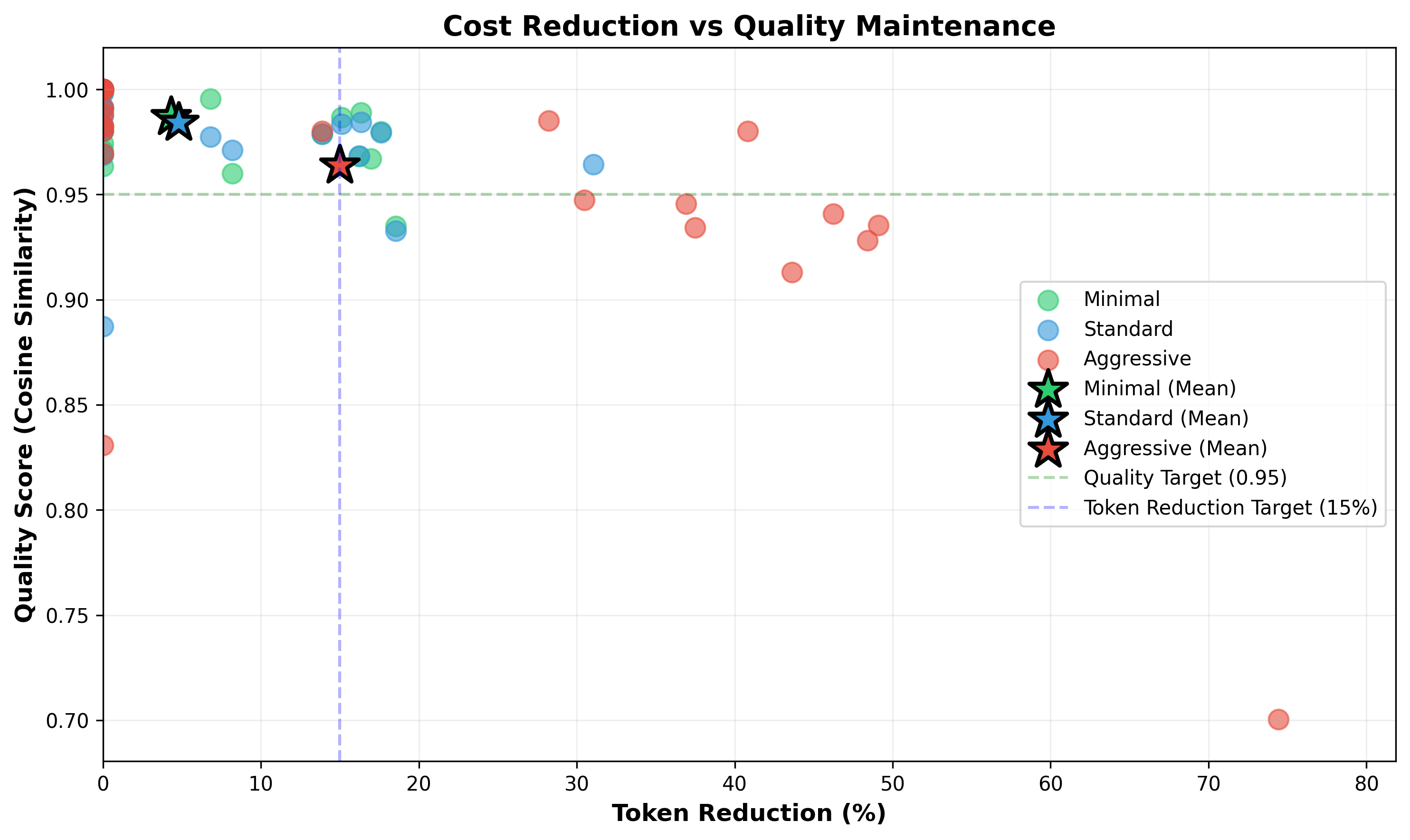

Results Summary

We tested 3 optimization strategies on 30 test cases (15 SQuAD Q&A pairs + 15 RAG scenarios):

| Strategy | Token Reduction | Quality (Cosine) | Judge Approval | Overall Equivalent |

|---|---|---|---|---|

| Minimal | 4.3% | 0.987 | 86.7% | 86.7% |

| Standard | 4.8% | 0.984 | 90.0% | 86.7% |

| Aggressive | 15.0% | 0.964 | 80.0% | 66.7% |

Strategy Definitions

Minimal (Conservative cleaning):

Standard (Recommended for most use cases):

Aggressive (Maximum savings):

pipeline = (

StripHTML()

| NormalizeWhitespace()

| Deduplicate(similarity_threshold=0.85)

| TruncateTokens(max_tokens=150, strategy="head")

)

Key Findings

🎯 Standard Strategy: Best Balance

The Standard strategy offers the best balance: - 4.8% token reduction with minimal quality impact - 90% judge approval - highest among all strategies - 0.984 cosine similarity - nearly perfect semantic preservation

💰 Cost Savings

Real-world cost savings for production applications:

Input cost: $0.01 per 1K tokens

| Volume | Minimal (4.3%) | Standard (4.8%) | Aggressive (15%) |

|---|---|---|---|

| 100K tokens/month | $4.30 | $4.80 | $15.00 |

| 1M tokens/month | $43 | $48 | $150 |

| 10M tokens/month | $430 | $480 | $1,500 |

Input cost: $0.03 per 1K tokens

| Volume | Minimal (4.3%) | Standard (4.8%) | Aggressive (15%) |

|---|---|---|---|

| 100K tokens/month | $13 | $14 | $45 |

| 1M tokens/month | $129 | $144 | $450 |

| 10M tokens/month | $1,290 | $1,440 | $4,500 |

📊 Performance by Scenario

RAG Scenarios (with duplicates and HTML): - Minimal: 17% reduction on average - Standard: 31% reduction on average - Aggressive: 49% reduction on complex documents

SQuAD Q&A (clean academic text): - All strategies: 2-5% reduction (less messy data = less to clean)

Key Insight

Token savings scale with input messiness. RAG contexts with HTML, duplicates, and whitespace see 3-10x more reduction than clean text.

Visualizations

Token Reduction vs Quality

The scatter plot shows each strategy's position in the cost-quality tradeoff space. Standard strategy achieves near-optimal quality while maintaining solid savings.

Test Dataset

The benchmark uses 30 carefully curated test cases:

SQuAD Samples (15 cases)

Question-answer pairs with context covering: - History ("When did Beyonce start becoming popular?") - Science ("What is DNA?") - Geography, literature, technology

RAG Scenarios (15 cases)

Realistic retrieval-augmented generation use cases: - E-commerce product catalogs with HTML - Documentation with excessive whitespace - Customer support tickets with duplicates - Code search results - Recipe collections

Running the Benchmark

Want to validate these results yourself?

Prerequisites

# Install dependencies

uv sync --group dev

# Set up OpenAI API key

cd benchmark/rag_quality

cp .env.example .env

# Edit .env and add your OPENAI_API_KEY

Run the Benchmark

This will:

1. Test 30 cases with 3 strategies (90 total comparisons)

2. Generate detailed report with visualizations

3. Save results to ./results/ directory

Estimated cost: ~$2-5 per full run (using gpt-4o-mini)

Advanced Options

# Use a different model

python benchmark.py --model gpt-4o

# Test specific strategies only

python benchmark.py --strategies minimal standard

# Use fewer test cases (faster, cheaper)

python benchmark.py --limit 10

Recommendations

Based on benchmark results:

For Production RAG Applications

Use Standard strategy - Best balance of savings and quality

For High-Volume, Cost-Sensitive Applications

Consider Aggressive strategy if 15% cost reduction outweighs slightly lower quality

pipeline = (

StripHTML()

| NormalizeWhitespace()

| Deduplicate(similarity_threshold=0.85)

| TruncateTokens(max_tokens=150)

)

For Quality-Critical Applications

Use Minimal strategy for maximum quality preservation

Latency & Performance

"What's the latency overhead?" - Negligible. Prompt Refiner adds < 0.5ms per 1k tokens of overhead.

Performance Results

| Strategy | @ 1k tokens | @ 10k tokens | @ 50k tokens | Overhead per 1k tokens |

|---|---|---|---|---|

| Minimal (HTML + Whitespace) | 0.05ms | 0.48ms | 2.39ms | 0.05ms |

| Standard (+ Deduplicate) | 0.26ms | 2.47ms | 12.27ms | 0.25ms |

| Aggressive (+ Truncate) | 0.26ms | 2.46ms | 12.38ms | 0.25ms |

Key Performance Insights

- ⚡ Minimal strategy: Only 0.05ms per 1k tokens (faster than a network packet)

- 🎯 Standard strategy: 0.25ms per 1k tokens - adds ~2.5ms to a 10k token prompt

- 📊 Context: Network + LLM TTFT is typically 600ms+, refining adds < 0.5% overhead

- 🚀 Individual operations (HTML, whitespace) are < 0.5ms per 1k tokens

Real-World Impact

10k token RAG context refining: ~2.5ms overhead

Network latency: ~100ms

LLM Processing (TTFT): ~500ms+

Total overhead: < 0.5% of request time

Performance Takeaway

Refining overhead is negligible compared to network + LLM latency (600ms+). Standard refining adds ~2.5ms overhead - less than 0.5% of total request time.

Running the Latency Benchmark

The latency benchmark requires no API keys and runs completely offline:

This will: 1. Test individual operations at multiple scales (1k, 10k, 50k tokens) 2. Test complete strategies (Minimal, Standard, Aggressive) 3. Report average, median, and P95 latency metrics 4. Show per-1k-token normalized overhead

Cost: $0 (runs locally, no API calls)

Duration: ~30-60 seconds

Learn More

- View Quality Benchmark Documentation

- View Latency Benchmark Documentation

- Browse Test Cases

- Examine Raw Results

Contributing

Have ideas to improve the benchmark? We welcome: - New test cases (especially domain-specific scenarios) - Additional evaluation metrics - Alternative refining strategies - Multi-model comparisons

Open an issue or submit a PR!